Next Generation (Nonlinear) Optimization Solvers

Project Overview

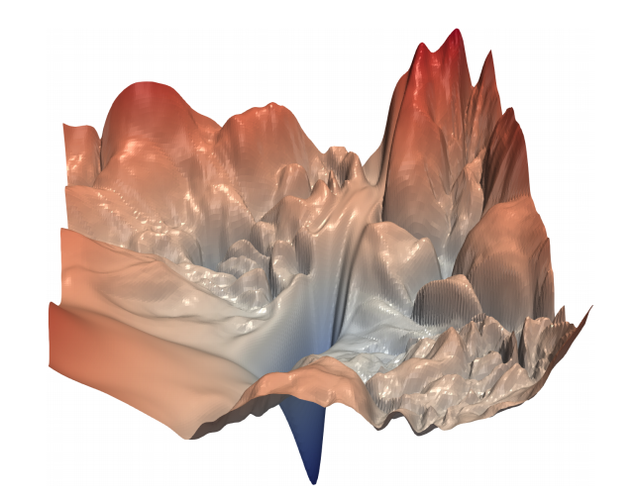

Getting (nonlinear) optimization to converge reliably, without becoming stuck in spurious local minima, is often challenging. Better methods for handling constraints and better-designed objective (cost) functions can help alleviate this problem. We draw on new methods in data-efficient machine learning, mathematical insights from optimization theory, and computational insights from computer architecture. With these, we are working to design robust optimization methods for real-time applications (e.g., nonlinear model predictive control for robotics).

Publications

- (2024). Optimizing at All Scales: Edge (Non)linear Model Predictive Control from MCUs to GPUs. In Frontiers of Optimization at RSS 2024.
- (2022). GPU Acceleration for Real-time, Whole-body, Nonlinear Model Predictive Control. Harvard University PhD Dissertation.
- (2020). Reinforcement Learning to Enable Robust Robotic Model Predictive Control. Harvard University Bachelor of Arts Thesis.
- (2019). Application of Approximate Matrix Multiplication to Neural Networks and Distributed SLAM. In HPEC 2019.
- (2019). Realtime Model Predictive Control using Parallel DDP on a GPU. In Online Optimal Control Workshop at ICRA 2019.
- (2018). A Performance Analysis of Parallel Differential Dynamic Programming on a GPU. In WAFR 2018.
- (2018). Parallel and Constrained Differential Dynamic Programming for Model Predictive Control. Harvard University Master of Engineering Thesis.