RoboPrec: Enabling Reliable Embedded Computing for Robotics by Providing Accuracy Guarantees Across Mixed-Precision Datatypes

Abstract

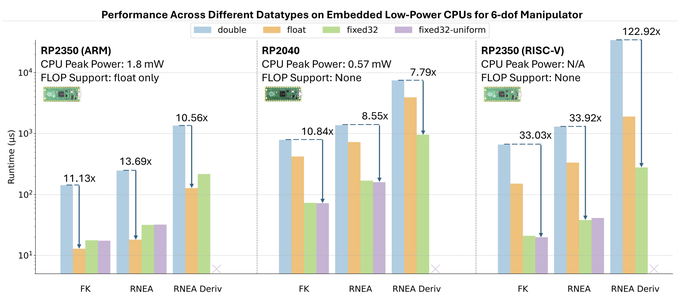

Mobile robots demand power efficiency as well as accuracy and high performance in their computations. Embedded microcontrollers and FPGAs can consume as much as 1000x less power than large CPUs and GPUs; however, these power-efficient platforms often lack full floating-point support and rely on fixed-point computations to deliver performance. This is a challenge because most robotics software uses floating-point datatypes (double, float) to conservatively ensure accuracy, and prior works that use fixed-point types employ unreliable ad hoc approaches to select the datatype precision (i.e., the number and allocation of bits). We address this challenge with the RoboPrec framework, in which we: (i) develop a transpiler that integrates code transformations and robot-specific code generation with traditional numerical stability analysis methods (which calculate error bounds), and adapts them to be practical for robotics software; and (ii) leverage this transpiler to generate guaranteed-accuracy fixed-point code that is deployable to embedded computing platforms. We use rigid body dynamics, a fundamental robotics workload, as a motivating case study. We find that RoboPrec-generated 32-bit fixed-point code can be up to 8x faster than float and 122x faster than double on embedded processors while, critically, also providing guaranteed accuracy bounds with lower worst-case error than float.
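To make the notion of fixed-point precision concrete, the following minimal Python sketch shows how the choice of fractional bits in a 32-bit signed fixed-point word trades representable range against worst-case rounding error. It is an illustration only and not RoboPrec's implementation; the helper names (`to_fixed`, `from_fixed`, `quantization_bound`) and the round-to-nearest conversion are assumptions made for this example.

```python
from fractions import Fraction


def to_fixed(x: float, frac_bits: int, total_bits: int = 32) -> int:
    """Round x to the nearest signed fixed-point integer (hypothetical helper)."""
    # Fraction(x) is the exact value of the float, so the only error is rounding.
    scaled = round(Fraction(x) * (1 << frac_bits))
    lo = -(1 << (total_bits - 1))
    hi = (1 << (total_bits - 1)) - 1
    if not lo <= scaled <= hi:
        raise OverflowError(f"{x} does not fit with {frac_bits} fractional bits")
    return scaled


def from_fixed(q: int, frac_bits: int) -> Fraction:
    """Exact rational value represented by the fixed-point integer q."""
    return Fraction(q, 1 << frac_bits)


def quantization_bound(frac_bits: int) -> Fraction:
    """Worst-case round-to-nearest error for one value: half a unit in the last place."""
    return Fraction(1, 1 << (frac_bits + 1))


if __name__ == "__main__":
    x = 3.14159
    for frac_bits in (16, 24):
        q = to_fixed(x, frac_bits)
        err = abs(from_fixed(q, frac_bits) - Fraction(x))
        print(frac_bits, float(err), float(quantization_bound(frac_bits)))
```

With 24 fractional bits the per-value error bound is 256x tighter than with 16, but only 8 bits remain for the sign and integer part, so a value such as 1000.0 no longer fits. This sketch bounds only the error of representing a single value; bounding the error accumulated through a whole computation is the job of the numerical analysis the abstract refers to.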